A step into the spatial web:

The HTML model element in Apple Vision Pro

visionOS 26 brings a major update to an important building block for the spatial web: The HTML model element is enabled by default, with a new API that’s ready to use today.

The video above is an example of the model element in Vision Pro. You can visit the model element demos yourself on Vision Pro or in the visionOS Simulator.

Background

A new generation of computing devices offers the ability to create new, immersive experiences, and the web should be part of that. While WebXR and Quick Look are great ways to see spatial content, we believe there should be an easier way for existing websites to show traditional pages side-by-side with spatial content like 3D models. And while JavaScript libraries are doing a great job of creating author-friendly APIs to integrate 3D content, we believe that open web standards that let browsers do the work of managing accessibility and privacy are a critical step toward a truly spatial web.

A simple API: Lights, Camera, Action

The model element is a proposal to do just that, using a single HTML element that links to a 3D asset like a USDZ file. On a spatial platform like Apple Vision Pro, your users see the model as a stereoscopically rendered 3D object, as if through a portal inside your web page. A single attribute lets users spin the model around. For more fine-grained control, authors can set a model’s scale, rotation, and position, manage its lighting environment, and control animation playback through an API modeled on the familiar <video> and <audio> elements. We’ve got more on USDZ files and how to make them at the end of the post.

The ready Promise

Any asset delivered over the web is going to take some time to get ready, and a 3D asset is no different. As a new feature on the modern web, model elements expose a ready Promise that you can await to ensure that the work of downloading and processing the model has completed and that the model is available to work with in the browser:

<model id="teapot">

<source src="teapot.usdz" type="model/vnd.usdz+zip">

</model>

const teapot = document.querySelector('#teapot');

await teapot.ready;

Separate resources like lighting are signaled with another Promise – more on that later.
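Because ready is a Promise, a loading failure can be handled with ordinary try/catch. The following is a minimal sketch rather than part of the proposal: the helper name waitForModel is hypothetical, and it assumes the Promise rejects when the asset cannot be downloaded or processed.

```javascript
// Hypothetical helper: resolves to true once the model is usable, or
// false if loading failed.
// Assumption: `model.ready` rejects when the asset cannot be loaded.
async function waitForModel(model) {
  try {
    await model.ready;
    return true;
  } catch (error) {
    console.warn('Model failed to load:', error);
    return false;
  }
}

// Browser usage (not executed here):
// const teapot = document.querySelector('#teapot');
// if (await waitForModel(teapot)) {
//   // Safe to work with the loaded model from this point on.
// }
```

Returning a boolean keeps the calling code simple when the page only needs to decide between showing the model and showing fallback content.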

Orbit mode

One of the main things users do with 3D models online is explore them by rotating the content to see it from all sides. We wanted to make this interactivity easy to enable, so it’s available by setting the model’s stagemode attribute to "orbit". With this set, a pinch-and-drag along the horizontal axis spins the model on its heading, and a vertical movement pitches it up and down to show the top and bottom.

<model stagemode="orbit">

<source src="teapot.usdz" type="model/vnd.usdz+zip">

<img alt="a teapot for interacting with" src="fallback/teapot-orbit.jpg">

</model>

This provides an interaction scheme that’s similar to AR Quick Look and to the default “orbit” behavior in many 3D apps on iOS, macOS and visionOS. But if you want to implement custom behavior to meet more specific needs, you can do it by modifying the entityTransform directly.

EntityTransform

In most 3D apps, the view is controlled with a camera, which specifies both the position the image is viewed from and details like the zoom level or Field of View (FoV). Because an inline 3D model appears as a real 3D object, however, the “camera” is the user’s actual eyes – meaning that FoV and other traditional camera properties must match the spatially correct values for the model to appear correctly. To control everything else, a model lets you translate, rotate, and scale the scene using the entityTransform property, which takes a DOMMatrix.

What is the DOMMatrix?

Even if you’ve never heard of DOMMatrix, you’ve probably used one before – it’s the object type created for CSS transforms, and it already has an extensive API for creating them in JavaScript too:

teapot.entityTransform = new DOMMatrix().translate(0, 0, -0.5).rotate(0,90,0);

This sets the teapot back 0.5 meters along the Z-axis and rotates it 90° about the Y-axis. You can build a matrix with the helper methods, but the order of the calls matters: each chained method post-multiplies the matrix, so the last call in the chain is the first one applied to the model.
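To see why the order of transformations matters, here is a small sketch that applies the same rotation and translation in both orders. It uses plain arithmetic instead of DOMMatrix so that it also runs outside a browser. The helper names rotateY and translate are hypothetical, and the sketch assumes entityTransform acts on the model's points the way a CSS transform matrix acts on an element.

```javascript
// Plain-arithmetic stand-ins for the two operations.
// Points are [x, y, z] in meters.
function rotateY(point, degrees) {
  const a = (degrees * Math.PI) / 180;
  const [x, y, z] = point;
  // Same convention as CSS rotateY(): a point on +X swings toward -Z.
  return [
    Math.cos(a) * x + Math.sin(a) * z,
    y,
    -Math.sin(a) * x + Math.cos(a) * z,
  ];
}

function translate(point, offset) {
  return point.map((value, i) => value + offset[i]);
}

const origin = [0, 0, 0];      // the model's own origin
const back = [0, 0, -0.5];     // 0.5 m back along Z

// Corresponds to new DOMMatrix().translate(0, 0, -0.5).rotate(0, 90, 0):
// the model turns in place first, then moves back.
const rotateThenMove = translate(rotateY(origin, 90), back);

// Corresponds to new DOMMatrix().rotate(0, 90, 0).translate(0, 0, -0.5):
// the model moves back first, then swings around the scene origin.
const moveThenRotate = rotateY(translate(origin, back), 90);

console.log('rotate, then move:', rotateThenMove);
console.log('move, then rotate:', moveThenRotate);
```

In the first ordering the model's origin ends up straight back along Z. In the second it ends up off to the side along negative X, because the rotation carries the already-translated model around with it.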

Initial size and bounding box information

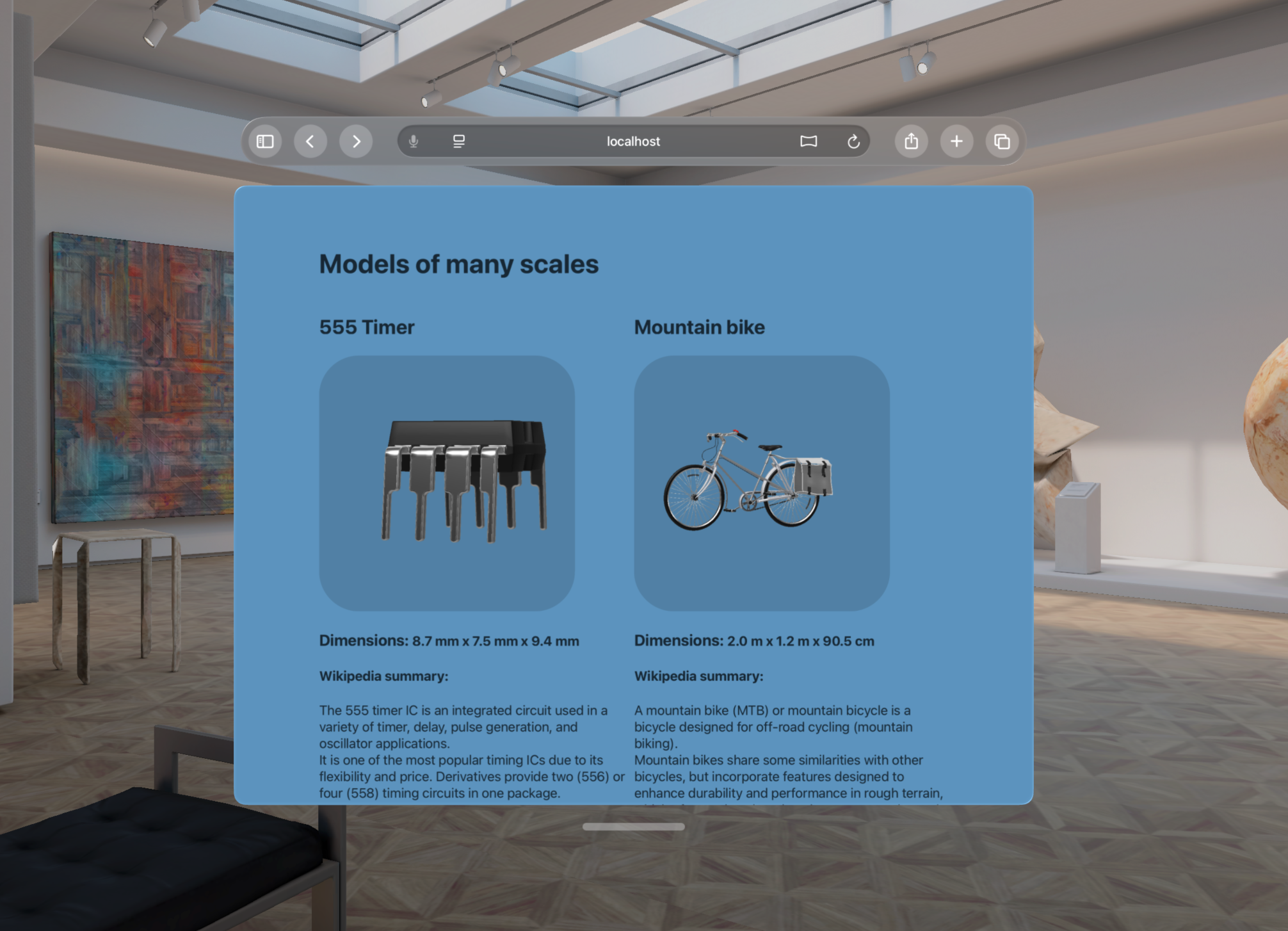

A model file contains 3D data about one or more objects, which could be any size – from a microchip to a mountain bike. They can also be centered on any position: a character from a video game may be centered on the character’s feet, while a 3D object captured on your iPhone might be centered on the initial position of the phone at the time of capture. To make models easier to work with, the initial entityTransform is aligned and scaled to fit the scene within the visible viewport. Once loaded, a model has boundingBoxCenter and boundingBoxExtents, specified as DOMPointReadOnly values. You can also use these to set the entityTransform yourself.

The derived bounding box information presented inside the Simulator.
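As one illustration of using the bounding box values to set the entityTransform yourself, the sketch below centers a model on the origin and scales it so its largest side matches a chosen size. The helpers fitToSize and applyFit are hypothetical, and the code assumes that boundingBoxExtents holds the full width, height, and depth of the box in meters; check this against the behavior you observe before relying on it.

```javascript
// Hypothetical helper: returns a uniform scale and a translation that
// center the bounding box on the origin and make its largest side
// `targetSize` meters long.
// Assumption: `extents` holds full side lengths, not half-sizes.
function fitToSize(center, extents, targetSize) {
  const largest = Math.max(extents.x, extents.y, extents.z);
  if (!(largest > 0)) {
    throw new RangeError('Bounding box has no size');
  }
  const scale = targetSize / largest;
  return {
    scale,
    // Offsets are in scaled units: they move the scaled center to the origin.
    x: -center.x * scale,
    y: -center.y * scale,
    z: -center.z * scale,
  };
}

// Browser-only usage (DOMMatrix is not available outside a browser).
function applyFit(model, targetSize) {
  const fit = fitToSize(
    model.boundingBoxCenter,
    model.boundingBoxExtents,
    targetSize
  );
  // translate() is chained first so that it is applied last:
  // points are scaled, then moved.
  model.entityTransform = new DOMMatrix()
    .translate(fit.x, fit.y, fit.z)
    .scale3d(fit.scale);
}
```

Keeping the arithmetic in fitToSize separate from the DOM call makes the calculation easy to check on its own, for example with values read from a loaded model in the visionOS Simulator.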

Manage lighting with environmentMap

Next, for a model to look realistic, it needs to look like it’s lit by a realistic scene. The industry-standard way to do that is to provide an environment map. This is an image of all the light that would illuminate an object, including not just direct “lights” like the sun but also the light reflected back from the ground and other surfaces. Because the bright lights in an environment map are so much brighter than the rest of the scene – often hundreds of thousands of times brighter – this “Image-Based Light” is best presented in a “High-Dynamic Range” (HDR) format. Popular formats for representing HDR images are the OpenEXR format and the Radiance HDR format.

A neutral environment map is provided by default, and authors can specify a custom one by setting the environmentmap attribute on the element. Environment maps need to be supplied in the “equi-rectangular” projection, similar to the way a world map is drawn today.

You can make these resources yourself in tools like Blender. Websites like Poly Haven have a long list of Creative Commons environment maps in a range of sizes, available in both HDR and EXR formats. Because these files can also be large, it’s important to track their load status – you can await the environmentMapReady Promise to guarantee that the map is downloaded and ready to contribute to rendering your content.

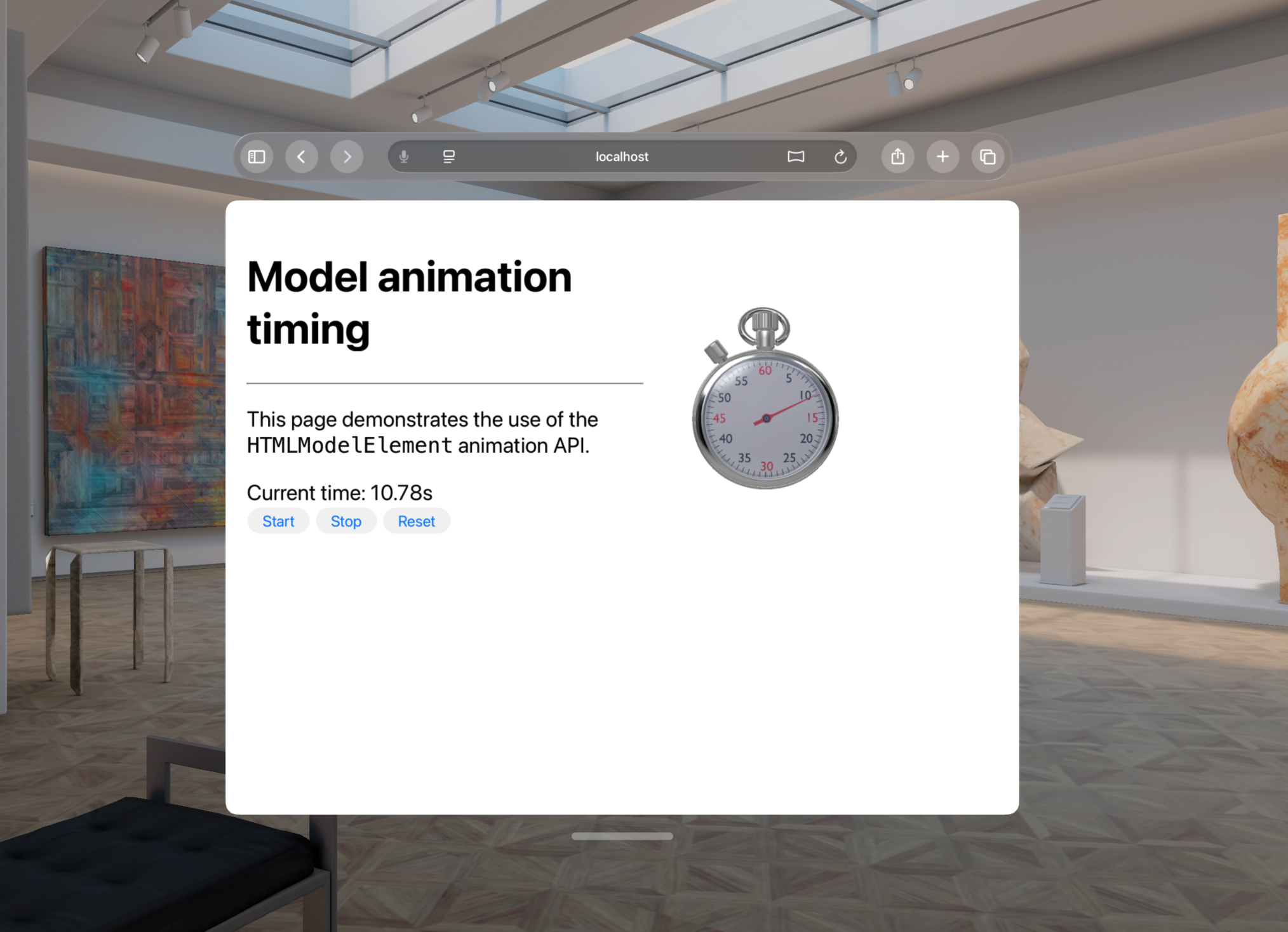
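Switching lighting at runtime could look like the sketch below, which sets the environmentmap attribute and then waits on environmentMapReady. The helper name setEnvironmentMap and the file path in the usage comment are hypothetical. The code also assumes that environmentMapReady tracks the most recently set map and rejects if that map cannot be loaded.

```javascript
// Hypothetical helper: points the model at a new environment map and
// waits for it to become usable. Resolves to true on success, false on
// failure.
// Assumptions: `environmentMapReady` reflects the map set just before,
// and rejects when that map fails to load.
async function setEnvironmentMap(model, url) {
  model.setAttribute('environmentmap', url);
  try {
    await model.environmentMapReady;
    return true;
  } catch (error) {
    console.warn(`Environment map ${url} failed to load:`, error);
    return false;
  }
}

// Browser usage (not executed here; the path is a placeholder):
// const teapot = document.querySelector('#teapot');
// await teapot.ready;
// await setEnvironmentMap(teapot, 'lighting/studio.exr');
```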

Animation

Finally, model files can have animated contents, using the familiar concept of an animation timeline. If there’s an animation in the source file, the model element will have a duration attribute and can be played and paused with play() and pause(). You can seek the animation by setting currentTime and change its speed by updating the playbackRate attribute. The default playback behavior of the element can also be configured declaratively with the autoplay and loop attributes in HTML.
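As a rough sketch of how these playback members fit together, the helper below jumps to a fraction of the animation's length. The name seekToFraction is hypothetical. The code assumes duration is reported in seconds, as it is for media elements, and that it is not a positive finite number when the file has no animation.

```javascript
// Hypothetical helper: seeks to a fraction (0 to 1) of the animation.
// Returns the new time, or null if the model has no usable animation.
// Assumption: `duration` is in seconds and is 0 or non-finite when the
// source file contains no animation.
function seekToFraction(model, fraction) {
  const duration = model.duration;
  if (!Number.isFinite(duration) || duration <= 0) {
    return null;
  }
  // Clamp so that out-of-range input lands on the start or the end.
  const clamped = Math.min(Math.max(fraction, 0), 1);
  model.currentTime = clamped * duration;
  return model.currentTime;
}

// Browser usage (not executed here):
// model.playbackRate = 0.5;     // play at half speed
// seekToFraction(model, 0.25);  // jump a quarter of the way in
// model.play();
```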

Try it yourself: Using USDZ

If you’re creating experiences for AR Quick Look on iPhone and Vision Pro, you’ve got everything you need to get started. If you’re not familiar with USD, it’s short for “Universal Scene Description”: an industry-standard way of working with entire projects of 3D content, open sourced by Pixar in 2016 as OpenUSD. Today it’s supported by all major 3D software tools with help from the Alliance for OpenUSD. USDZ is a USD scene packed into a single file, ready to use in Quick Look for iOS, iPadOS and visionOS, and in the model element today in visionOS.

If you want to get started with the model element and don’t have any content yourself, you can find a great selection of USDZ model files in Reality Composer Pro, which comes with Xcode. If you have an iPhone or iPad with a LiDAR sensor, you can scan a 3D object with Object Capture in Reality Composer, developed by Apple and free in the App Store. Or if you want to get started with making your own models, the powerful and popular modeling tool Blender has excellent USD import and export built right in.

Blender – How to export to USDZ with embedded Textures

Reality Composer (on iPad) – exporting to USDZ

Polycam to Blender tutorial

Feature status and Feedback

The features described in this post conform to the API proposal being considered by the W3C for the Model Element and the HTML specification managed by WHATWG. It’s a work in progress, so we’d love to hear your feedback and see what you make. To share your thoughts on the HTML model element, find us on Mastodon at @jensimmons@front-end.social, @jondavis@mastodon.social, or on X at @zachernuk. Or send a reply on X to @webkit. You can also follow WebKit on LinkedIn. If you run into any issues, we welcome your feedback about WebKit at bugs.webkit.org. Or, if the issue involves technology deeper in the stack, file feedback with a sysdiagnose to provide information on how the operating system itself is being impacted. Filing issues really does make a difference. Thanks.