Introducing Natural Input for WebXR in Apple Vision Pro

Apple Vision Pro is here, and with it, a lot of excitement about the possibilities for WebXR in visionOS. Support is in progress, and you can test it today.

WebXR now includes a more natural and privacy-preserving method for interaction — the new transient-pointer input mode — available behind a flag for Safari 17.4 in visionOS 1.1. Let’s explore how natural input for WebXR works, and how to leverage it when developing a WebXR experience for Apple Vision Pro.

Background

WebXR lets you transform 3D experiences created in WebGL into immersive, spatial experiences available directly in the browser. Defined in web standards governed by the W3C, WebXR is an excellent choice for presenting immersive content to your users.

One challenge, though, is that because it’s completely immersive — and rendered entirely through WebGL — it’s not possible to provide interaction via DOM content or the traditional two-dimensional input available on a typical web page via a mouse or trackpad. This, combined with the fact that a spatial experience requires spatial input, means WebXR needs a totally new interaction model.

The interaction model of visionOS, known as natural input, uses a combination of eyes and hands for input. A user simply looks at a target and taps their fingers to interact. (Or uses the alternatives provided by accessibility features.)

The initial web standards for WebXR assumed all input would be provided by persistent hardware controllers. Since the natural input interaction model of visionOS differs from XR platforms which rely on listening to physical controllers and button presses, many existing WebXR experiences won’t work as intended on Apple Vision Pro.

We’ve been collaborating in the W3C to incorporate support for the interaction model of visionOS into WebXR. And we’re excited to help the WebXR community add support to popular WebXR frameworks.

Using Natural Input interactions

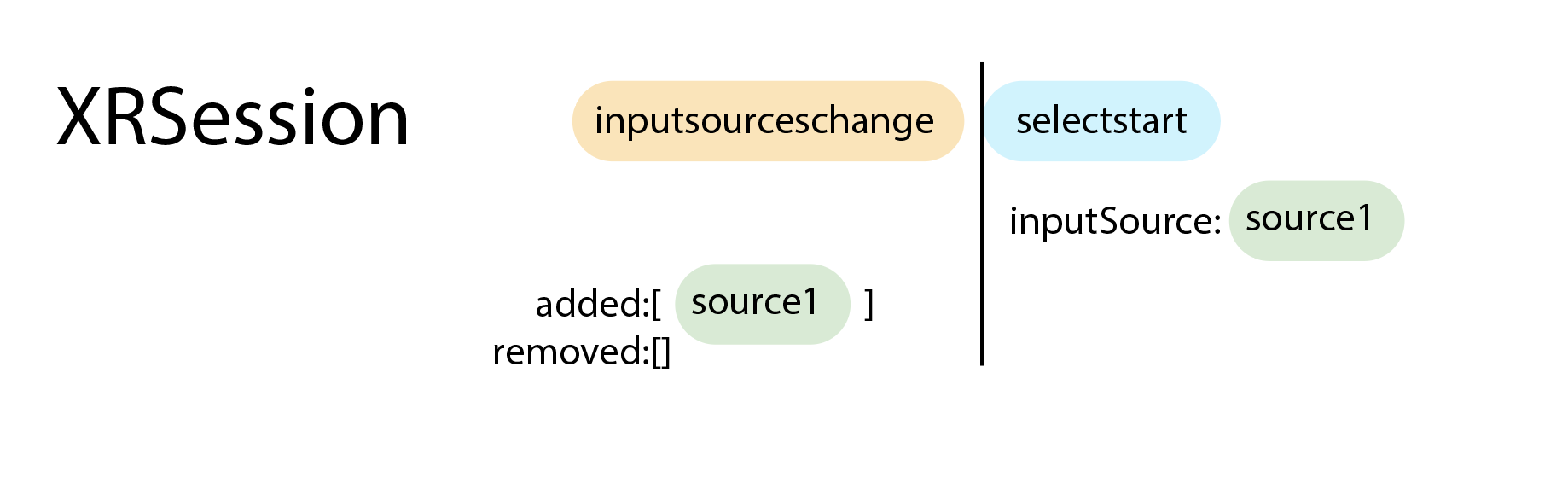

Since WebXR in visionOS requires the use of spatial inputs, rather than a trackpad, touch, or mouse, and the DOM isn’t visible within a WebXR session, inputs are provided as part of the XRSession itself. Events related to the input, e.g. select, selectstart, and selectend, are then dispatched from the session object. The XRInputSources are available within the xrSession.inputSources array.

Because the default WebXR input in visionOS is transient, that array is empty until the user pinches. At that moment, a new input is added to the array, and the session fires an inputsourceschange event followed by a selectstart event. You can use these to detect the start of the gesture. To differentiate this new input type, it has a targetRayMode of transient-pointer.
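The start of a gesture can be detected with a sketch along these lines. It assumes `session` is an active XRSession obtained elsewhere; the helper name `watchTransientInputs` and the `onPinchStart` callback are hypothetical names introduced only for illustration.

```javascript
// Hypothetical helper: calls onPinchStart once per new transient-pointer gesture.
// `session` is assumed to be an active XRSession.
function watchTransientInputs(session, onPinchStart) {
  const handleSelectStart = (event) => {
    const source = event.inputSource;
    // React only to inputs whose targetRayMode is 'transient-pointer'.
    if (source && source.targetRayMode === 'transient-pointer') {
      onPinchStart(source);
    }
  };
  session.addEventListener('selectstart', handleSelectStart);
  // Return a function that removes the listener again.
  return () => session.removeEventListener('selectstart', handleSelectStart);
}
```

Calling the returned function stops listening, which can be useful when the session ends or the scene changes.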

Ongoing interaction

The XRInputSource contains references to two different positions in space related to the input: the targetRaySpace and the gripSpace. The targetRaySpace represents the user’s gaze direction: it has its origin between the user’s eyes and points at what the user was looking at when the gesture started. After it is set to that initial gaze direction, the targetRaySpace is updated with the movement of the user’s hand rather than their eyes; that is, moving the hand to the left moves the ray to the left as well. The gripSpace represents the location of the user’s pinching fingers at the current point in time.

The targetRaySpace can be used to find what the user wanted to interact with when they started the gesture, typically by raycasting into the scene and picking the intersected object. The gripSpace can be used to position and orient objects near the user’s hand for interaction purposes, e.g. to flip a switch, turn a dial, or pick up an item from the virtual environment. To learn more about finding the pose of the input, see Inputs and input sources on MDN.
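As a rough illustration, both spaces can be resolved to poses once per frame with XRFrame.getPose(). The helper name `readInputPoses` is hypothetical; `frame` is assumed to be the XRFrame passed to the session’s animation frame callback, and `referenceSpace` an XRReferenceSpace requested from the session beforehand.

```javascript
// Hypothetical helper: reads both poses of one input for the current frame.
function readInputPoses(frame, inputSource, referenceSpace) {
  // Ray that starts between the eyes and points at the gaze target.
  const rayPose = frame.getPose(inputSource.targetRaySpace, referenceSpace);
  // gripSpace can be null on some inputs, so guard before using it.
  const gripPose = inputSource.gripSpace
    ? frame.getPose(inputSource.gripSpace, referenceSpace)
    : null;
  return {
    // getPose() may return null when a pose cannot be determined.
    rayMatrix: rayPose ? rayPose.transform.matrix : null,
    gripPosition: gripPose ? gripPose.transform.position : null,
  };
}
```

The ray matrix would typically feed the raycaster of whichever 3D framework is in use, while the grip position is where a held object would be drawn.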

End of interaction

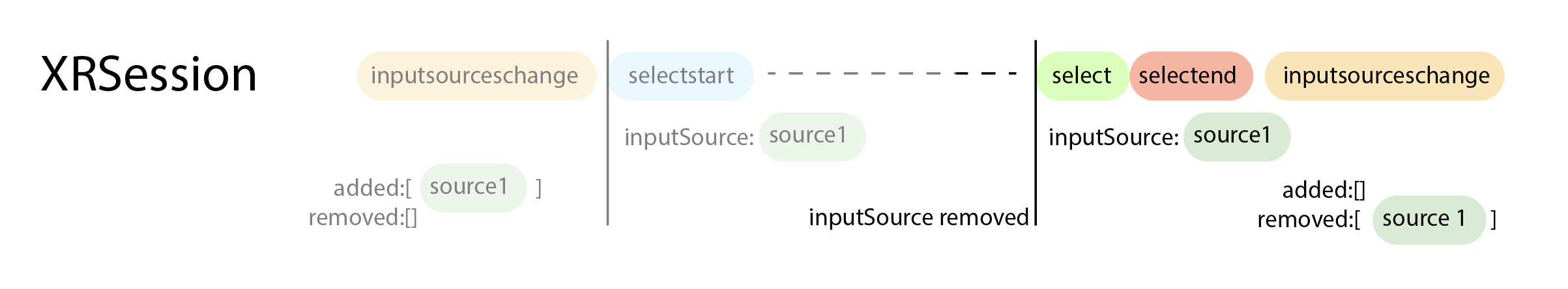

Three events are fired from the session object when the user releases the pinch.

First come a select event and a selectend event, both referencing the input as the event.inputSource object. The session then fires a final inputsourceschange event, indicating that this inputSource has been removed.
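Because each input disappears when the pinch ends, any per-input state is best released at that point. The following is a minimal sketch, assuming `session` is an active XRSession; `trackPinchState` and `createState` are hypothetical names used only for this example.

```javascript
// Hypothetical helper: keeps one state object per active pinch and drops it
// when the session reports that the input has been removed.
function trackPinchState(session, createState) {
  const stateByInput = new Map();
  session.addEventListener('selectstart', (event) => {
    stateByInput.set(event.inputSource, createState(event.inputSource));
  });
  session.addEventListener('inputsourceschange', (event) => {
    // event.removed lists the inputs that no longer exist.
    for (const source of event.removed) {
      stateByInput.delete(source);
    }
  });
  return stateByInput;
}
```

Keying the map by the input object itself, rather than by its array index, keeps the bookkeeping correct when several inputs come and go.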

Benefits for simplicity and privacy

As each input represents a single, tracked point in space per pinch, and because it exists only for the duration of the user’s pinch, significantly less data about a user’s motion is required overall.

Combining transient inputs with hand tracking

WebXR on Safari in visionOS continues to support full hand tracking as well, supplying hand joint information for the duration of the experience. If the call to navigator.xr.requestSession includes hand-tracking as an additional feature and the user grants it, the first two inputs in the inputSources list will be standard tracked-pointer inputs supplying this joint information. Because these inputs persist for the duration of the session, any transient-pointer inputs will appear further down the list. The hand inputs are supplied for pose information only and do not trigger any events. For information on requesting features in an XRSession, refer to the documentation at MDN.
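A sketch of requesting hand tracking and reading one joint might look like the following. The function names are hypothetical, `xr` is assumed to be navigator.xr, and the 'immersive-vr' mode and the choice of the index fingertip joint are assumptions made for the example rather than anything this article prescribes.

```javascript
// Request a session with hand tracking as an optional feature.
// Assumption: 'immersive-vr' is the session mode wanted here.
function requestSessionWithHands(xr) {
  return xr.requestSession('immersive-vr', {
    optionalFeatures: ['hand-tracking'],
  });
}

// Hypothetical helper: collects index fingertip positions for every input
// that carries hand joint data in the current frame.
function readIndexFingertips(frame, inputSources, referenceSpace) {
  const tips = [];
  for (const source of inputSources) {
    if (!source.hand) continue; // skip inputs without joint data
    const joint = source.hand.get('index-finger-tip');
    const pose = joint ? frame.getJointPose(joint, referenceSpace) : null;
    if (pose) {
      tips.push({
        handedness: source.handedness,
        position: pose.transform.position,
      });
    }
  }
  return tips;
}
```

Listing hand-tracking under optionalFeatures lets the session start even if the user declines, in which case no input will have a hand property.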

Testing natural input on visionOS

Using the visionOS simulator

The new transient-pointer input mode works in the visionOS simulator, so you can test your WebXR experience without needing Apple Vision Pro. To get started:

- Download Xcode from the Mac App Store.

- Select the visionOS SDK for download and installation.

- Launch Xcode to complete the installation process. This installs a new application named “Simulator”. Learn more about using the visionOS Simulator.

- Open the Simulator app on macOS.

- Create a new visionOS simulator or open an existing one.

If you have a website open in Safari on macOS, you can easily trigger that page to open in Safari in visionOS Simulator. On Mac, select Develop > Open Page With > Apple Vision Pro. (If the Develop menu is not present in the menu bar, enable features for web developers.)

Enabling WebXR support

Whether you have Apple Vision Pro or are using visionOS Simulator, you’ll want to turn on support for WebXR. From the Home View, go to Settings > Apps > Safari > Advanced > Feature Flags and enable WebXR Device API.

Identifying potential issues in existing WebXR scenes

WebXR is provided in visionOS 1.1 for testing purposes only. If you encounter bugs in the browser, please don’t attempt to target a user-agent string to work around them. Instead, report them to us via Feedback Assistant.

When hand-tracking is enabled I can’t select anything.

Your experience is probably only looking at events which correspond to the first two inputs in the inputSources array. Many examples in the popular framework Three.js only act on events related to inputs at index 0 and 1. When hand tracking is enabled, the inputs corresponding to the hand tracking data appear in entries 0 and 1, but the transient-pointer style inputs appear in entries 2 and 3.
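One way to avoid depending on array positions is to select inputs by their targetRayMode instead. This is a minimal sketch; `findTransientInputs` is a hypothetical helper name, and `inputSources` is assumed to be the session’s inputSources list.

```javascript
// Hypothetical helper: returns the transient-pointer inputs wherever they
// sit in the list, whether or not hand tracking inputs come first.
function findTransientInputs(inputSources) {
  return Array.from(inputSources).filter(
    (source) => source.targetRayMode === 'transient-pointer'
  );
}
```

In event handlers, the same effect comes from reading event.inputSource directly rather than looking up a fixed index.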

When I attach an object to the controller it appears at the user’s face.

The input’s targetRaySpace originates between the user’s eyes and points in the direction of their gaze. To place an object in the user’s hand, instead attach objects to the input’s gripSpace position, which corresponds to the location of the user’s pinch.

The selection ray to interact with objects lags between the position of my current selection and my last one.

Some frameworks attempt to smooth out controller motion by interpolating the position of the controller each frame. This interpolated motion should be reset if a controller is disconnected and reconnected. You may need to file an issue with the framework author.
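To illustrate the kind of reset involved, here is a simplified sketch of a smoothed position that snaps to the first value it sees after a reset. It is not how any particular framework implements smoothing; the `SmoothedPoint` class, its `factor` parameter, and `resetOnInputChange` are hypothetical.

```javascript
// Hypothetical exponential smoother for a 3D point stored as [x, y, z].
class SmoothedPoint {
  constructor(factor = 0.5) {
    this.factor = factor; // fraction of the remaining distance moved per update
    this.value = null;    // null means "no history yet"
  }
  reset() {
    this.value = null;
  }
  update(target) {
    if (this.value === null) {
      // First sample after a reset: jump straight to the target.
      this.value = [...target];
    } else {
      this.value = this.value.map((v, i) => v + (target[i] - v) * this.factor);
    }
    return this.value;
  }
}

// Clear the history whenever the set of inputs changes, so a new pinch
// does not interpolate from where the previous one ended.
function resetOnInputChange(session, smoother) {
  session.addEventListener('inputsourceschange', () => smoother.reset());
}
```

Without the reset, the first frames of a new pinch would blend from the previous gesture’s last position, which matches the lag described above.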

Let us know

We can’t wait to see what you make. To share your thoughts on WebXR, find us on Mastodon at @ada@mastodon.social, @jensimmons@front-end.social, @jondavis@mastodon.social, or on X at @zachernuk. Or send a reply on X to @webkit. You can also follow WebKit on LinkedIn. If you run into any issues, we welcome your feedback (please include a sysdiagnose from Apple Vision Pro), or your WebKit bug report about Web Inspector or web platform features. Filing issues really does make a difference. Thanks.